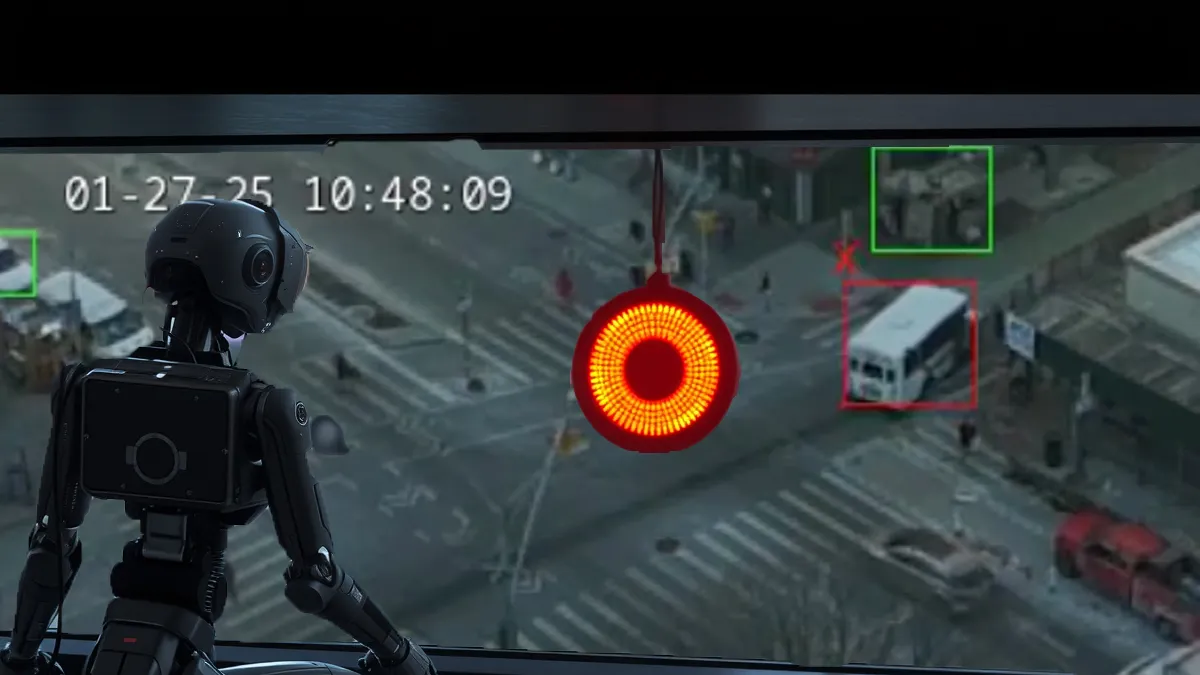

Traffi: Community Traffic Monitoring with Edge AI

By Sam Coward

Outside my lab window stands a traffic intersection with a persistent problem: a no right turn sign that drivers regularly ignore. This daily observation sparked an idea - what if we could use AI to monitor and document these violations, right at the intersection itself? Enter Traffi, an inexpensive AI-powered traffic monitor that runs entirely on edge hardware.

The timing couldn’t be better. AI at the edge - running on local hardware rather than in distant datacenters - has reached an inflection point. Modern edge devices pack serious computational punch at surprisingly low costs, while Large Language Models (LLMs) dramatically accelerate development time. This combination enables solo developers to tackle projects that would have seemed daunting just a year ago. Here’s how I built Traffi in just three weeks, learning and iterating with AI as my coding partner.

AI hardware at the Edge

Raspberry Pi single board computers (SBCs) are one of the most well known platforms with plenty of support for developers. The modern Raspberry Pi 5 even sports a PCIe interface which many have welcomed, especially as a way to add M.2 NVMe storage.

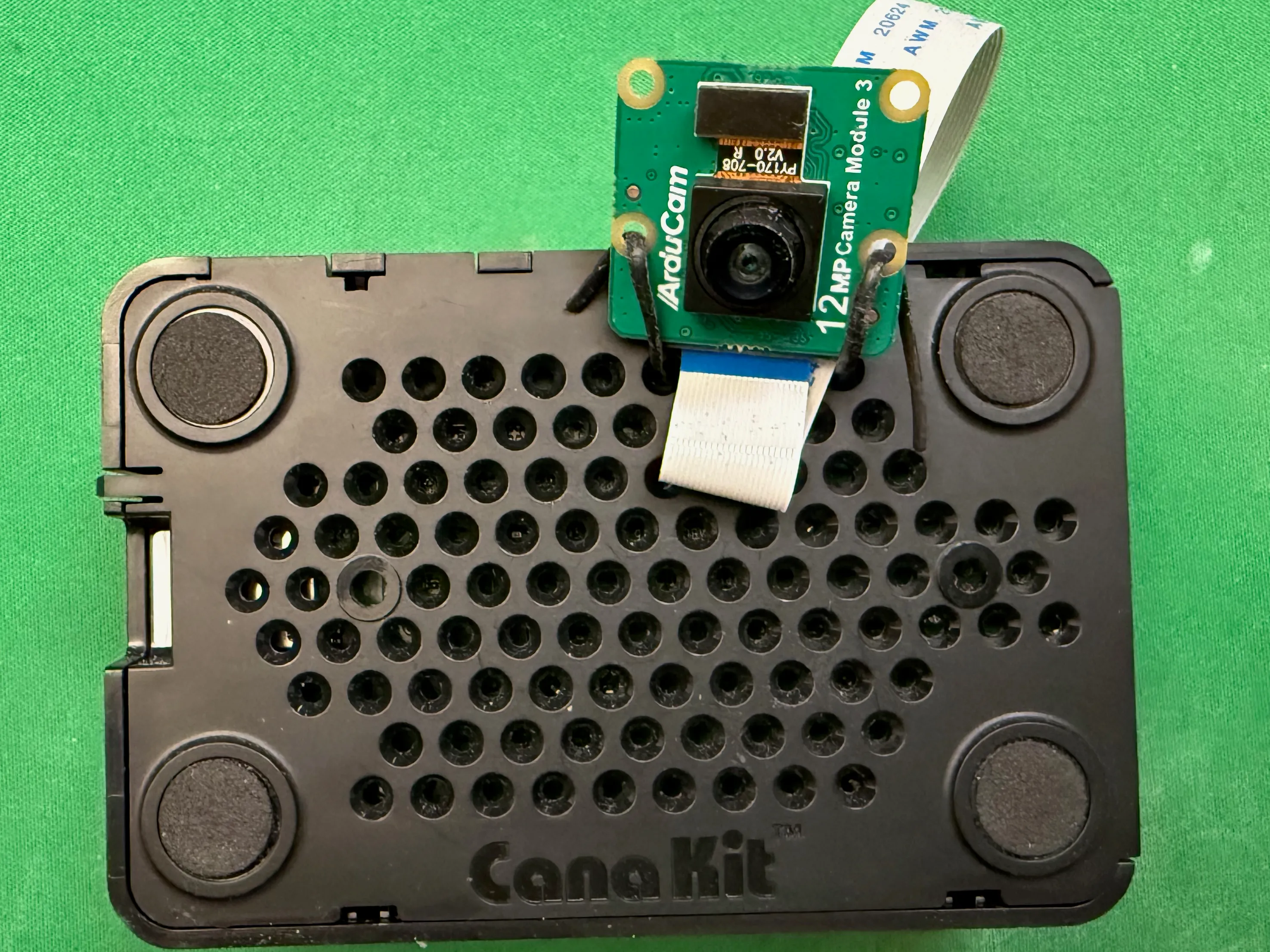

Leveraging this interface, the Raspberry Pi AI Kit, released in June 2024 for USD $70, provides a beefy 13 TOPS using a HAILO 8L AI accelerator. An even newer HAT model sports the bigger HAILO 8, which doubles that to 26 TOPS.

Attaching a simple $25 camera module, we now have all the necessary hardware. All that’s needed now is to build some software!

Pairing up with AI

AI chatbots such as ChatGPT and Claude are built on LLMs (Large Language Models) and have many capabilities, among them working with code: understanding it, writing it, changing it.

Prior to SKC Future Industries, I often worked in a pair programming setting, so I set out to frame my interactions with LLMs much like conversations with my past engineering colleagues, sharing my code in chat as necessary.

I employed a mix of models, some paid, others open source running on Ollama on my laptop:

- Claude Sonnet 3.5 (paid)

- ChatGPT 4o/o1 (paid)

- Llama 3.3 70B

- Qwen2.5-Coder 32B

Through the course of about 3 weeks, we built the following features:

- detect vehicles regardless of type (bus/truck/etc)

- detect vehicle origin side

- detect vehicle crossing to a destination side

- mark illegal vehicle crossings

- overlay time and illegal crossing count

- implement configurable intersections

- archive footage for training data

- website, with feedback form and privacy policy

- H.264 HLS (HTTP Live Streaming)

- store all detected crossings in a timestamped database
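To make the origin, destination, and illegal-crossing features above concrete, here is a minimal sketch of one way to classify a tracked vehicle. It is not Traffi's actual code. The rectangular `Zone` layout, the zone names, the coordinates, and the `classify_crossing` helper are all assumptions made for illustration, and the tracker that produces each list of centroids is taken as given.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Zone:
    """Axis-aligned rectangle (pixel coordinates) marking one side of the intersection."""
    name: str
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, x: float, y: float) -> bool:
        return self.x0 <= x <= self.x1 and self.y0 <= y <= self.y1


def zone_at(zones, x, y) -> Optional[str]:
    """Return the name of the first zone containing the point, or None."""
    for zone in zones:
        if zone.contains(x, y):
            return zone.name
    return None


def classify_crossing(track, zones, illegal_pairs):
    """Classify one vehicle track, given as (x, y) centroids, oldest first.

    Returns (origin, destination, is_illegal), or None if the vehicle
    never moved from one zone into a different one.
    """
    origin = None
    for x, y in track:
        side = zone_at(zones, x, y)
        if side is None:
            continue                 # between zones, e.g. mid-intersection
        if origin is None:
            origin = side            # first zone the vehicle was seen in
        elif side != origin:
            return origin, side, (origin, side) in illegal_pairs
    return None


# Hypothetical layout for a 432x240 frame; a real one would come from config.
ZONES = [
    Zone("south", 150, 180, 280, 240),
    Zone("east", 360, 80, 432, 160),
    Zone("north", 150, 0, 280, 60),
]
ILLEGAL = {("south", "east")}        # the banned right turn

right_turn = [(200, 230), (220, 200), (300, 150), (380, 120)]
straight = [(200, 230), (210, 150), (215, 40)]
print(classify_crossing(right_turn, ZONES, ILLEGAL))  # ('south', 'east', True)
print(classify_crossing(straight, ZONES, ILLEGAL))    # ('south', 'north', False)
```

Keeping the zones and the set of illegal pairs as plain data is what would make the intersection configurable: a different junction needs a different layout, not different code.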

High toil code, the low hanging fruit for LLMs

I found the most profound productivity gains in tasks with high toil. For example, Claude confidently carried out two full rewrites as our iterative building ran into new challenges.

First, once I’d realized Python wasn’t going to have the performance that I wanted, a quick discussion brought up GStreamer, a framework of pluggable streaming components which could do most of the generic tasks. I needed only to supply the application-specific behavior in a dynamic library. Without much effort, Claude turned the Python code into a bash script and C++ files for the library. A makefile later, we were back in business with barely a hitch.

Second, some hours later, I discovered that customizing filesystem storage would require more C++ code to generate file paths with the date structure I wanted. This is done via what GStreamer calls ‘signals’, which would also require a pointer to the splitmuxsink component. I knew the bash script, which was merely configuring the component, wasn’t going to cut it. So I pasted the code again and asked Claude to rewrite it all in C++, and it worked, first time. The glee I felt seemed almost criminal.
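For readers unfamiliar with the mechanism: splitmuxsink emits a `format-location` signal each time it is about to start a new file, and the handler returns the location to write to. The real handler here was C++. The sketch below shows only the path-building logic, in Python for brevity, and the directory layout, file extension, and function names are assumptions rather than what Traffi actually uses.

```python
from datetime import datetime
from pathlib import Path


def segment_path(root: str, when: datetime, fragment_id: int) -> Path:
    """Build <root>/YYYY/MM/DD/HHMMSS-<fragment>.mp4 for one recorded segment."""
    day_dir = Path(root) / when.strftime("%Y/%m/%d")
    return day_dir / f"{when.strftime('%H%M%S')}-{fragment_id:05d}.mp4"


def prepare_segment(root: str, when: datetime, fragment_id: int) -> str:
    """Create the day directory if needed and return the path as a string.

    A signal handler would call this with the current time and the
    fragment id it was handed, then return the result to the sink.
    """
    path = segment_path(root, when, fragment_id)
    path.parent.mkdir(parents=True, exist_ok=True)
    return str(path)


print(segment_path("archive", datetime(2025, 1, 15, 8, 30, 0), 42).as_posix())
```

The sketch creates the day directory itself rather than assuming the sink will do so.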

Now, each time, I am confident that I, too, could have done the conversion, but the LLM’s speed is unmatched by several orders of magnitude, even if I account for debugging and fixing the inevitable mistakes, which I myself am also prone to making. Probably more so with some unfamiliar technology.

It’s going to be essential for folks to recalibrate their assumptions when estimating toil. A good many software projects are engineered to an insane degree to be hyper-malleable, because there is a strong incentive both to stay flexible for future changes and to avoid rewrites. When a rewrite takes minutes rather than weeks, that trade-off shifts.

Mundane Code As a Service

Another example: At some point, I wanted hot loaded configuration. I write a spec for what I want in about a minute or two, and Claude bangs it out in about 10 seconds. Oh, what, it’s polling the filesystem? I respond telling it to use filesystem notifications instead, and then another 10 seconds later it’s done. With that mundane code out of the way, I had more time to think about and work on the core product, amazing!
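As a rough illustration of the reload half of that feature, the sketch below shows a holder that re-reads a JSON file and swaps the config in only if it parses. The class name, the JSON format, and the file layout are assumptions. The notification side (inotify on Linux, for instance) is deliberately left out, since Python's standard library does not wrap it. Whatever watcher is used would simply call `reload()` from its callback.

```python
import json
import threading


class ConfigHolder:
    """Holds the current config and swaps it atomically on reload."""

    def __init__(self, path):
        self._path = path
        self._lock = threading.Lock()
        self._config = self._read()      # fail loudly if the first load is bad

    def _read(self):
        with open(self._path, encoding="utf-8") as fh:
            config = json.load(fh)
        if not isinstance(config, dict):
            raise ValueError("top-level config must be a JSON object")
        return config

    def get(self):
        """Return the most recent good config."""
        with self._lock:
            return self._config

    def reload(self):
        """Call from the file-change callback. Returns True if a new config was applied."""
        try:
            fresh = self._read()
        except (OSError, ValueError):    # json.JSONDecodeError is a ValueError
            return False                 # keep the last good config
        with self._lock:
            self._config = fresh
        return True
```

Keeping the last good config matters with notifications in particular, because a change event can fire while an editor has only half-written the file.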

Sounding Boards

I also engaged the LLMs in open-ended discussions. Much as I would with a fellow engineer, I would explain the context, my goals and current ideas, and then solicit advice. Whilst this can be great for reorienting, it is also one area where I noticed model output can go off the rails, and you really have to be critical of what it produces. It was one such discussion that originally brought GStreamer to my attention.

Hell is other people, and LLMs

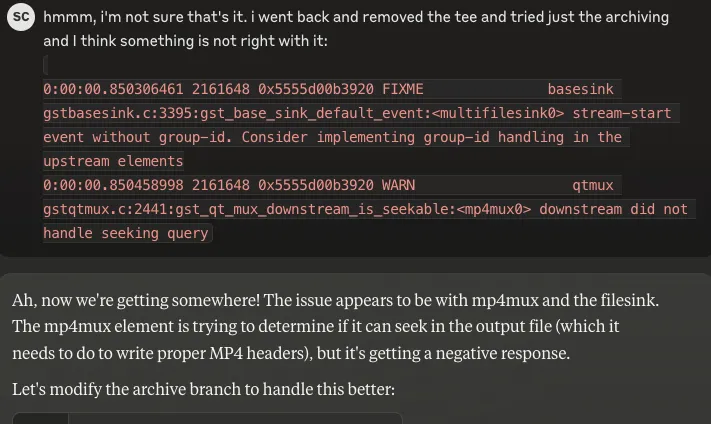

Despite all these positive interactions, there are some moments where things just break down. Debugging is a good example. Sometimes the model is able to pinpoint exact config errors like a circa-2015 Stack Overflow search hit, and at other times it is utterly convinced that misguided solutions will work. You can even vent back at the model, “But that won’t work, here’s why …”, and then wonder if it’s just time for a coffee break when it opens with some obsequious apology, affirms how right you are, and then reiterates the same misguided solution or some other bastard chimera.

While these pits of doom happen with all the models, they do tend to happen more with the open models. Nevertheless, I find it encouraging that these freely downloadable models perform as well as they do, and I have found them useful on long flights without Internet (which hopefully won’t be a thing for much longer!).

You can sometimes finesse the prompt and context to get the model to do what you want. Other times, you’ll have to roll up your sleeves and do some of the dirty work yourself.

Even in these dire cases, you can still get the LLM to help you with what you don’t know. For instance, the model once could not fix a set of changes after several loops, so I dismantled things a bit until I was able to get some logs. Keep in mind that I was only a few hours into the GStreamer API at this point, so I didn’t really know how to read them well.

But Claude did, and this new information enabled the model to produce a useful result moving us forward. I know it’s all math on GPUs, but it’s hard not to feel the warm fuzzy Eureka moments when you’ve collectively made a breakthrough while working with LLMs.

Reflections post MVP

Overall, in working with AI to build the MVP, I am impressed with what LLMs can do. Whilst they still need an experienced engineer to verify, test and integrate their outputs, they can reduce a lot of programming toil. By far, Claude produced the most usable outputs, especially over entire files or multiple files.

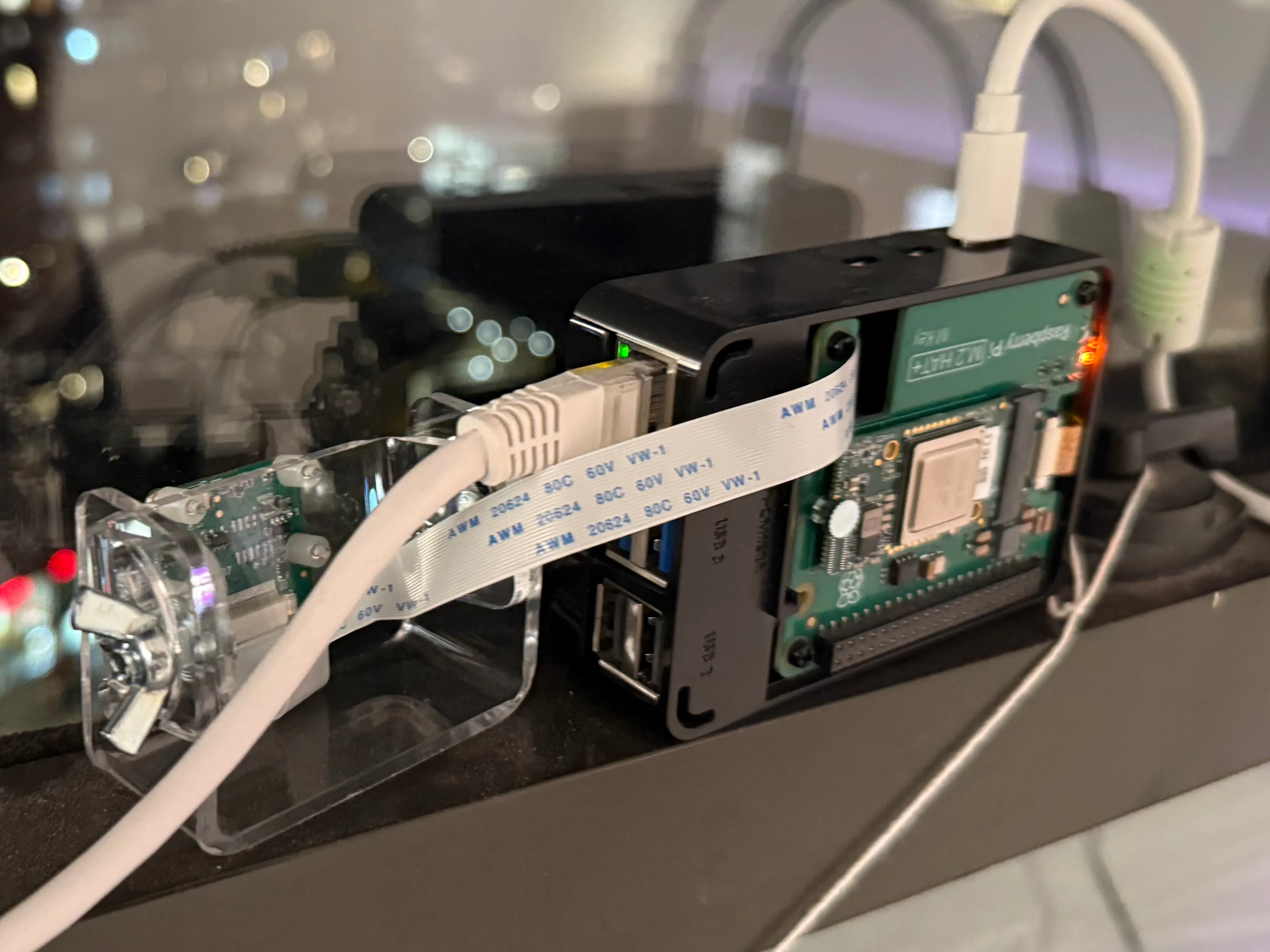

With the help of AI, I was ultimately able to build the first Traffi, which now runs live at https://traffi.skcfi.dev, learning a lot along the way in just a few short weeks. Here you see it mounted in the window, with a plexiglass mount to hold the camera. This keeps it steady, which avoids the need to constantly recalibrate the camera to the intersection.

It consumes about 7W of power, and streams 432x240 video at 24fps with about 10s of lag behind the real world when viewed in my browser. Thanks to the HAILO, the Pi’s CPU usage is only around 20-30% despite running the AI inference.

This experience tells me that AI clearly increases my productivity, and helps bring a diversity of thoughts and ideas into the coding process for solo builders. Had I started again without AI, and without the learnings I made along the way, I doubt I would have been able to learn and execute as quickly.

Lessons Learned

This project was very rewarding. I achieved my goal of getting practical hands-on experience building with and validating the HAILO 8L + Raspberry Pi 5 for this application, which easily handled:

- Processing 24FPS 432x240 into both an archive and HTTP live stream

- Serving live stream via Cloudflare. Hopefully this will hold up with cache headers!

- all this with only 7W consumption!

Learnings from working with LLMs:

- LLMs open and closed can work well as ‘coding pairs’

- Open models still aren’t as good, but they’re catching up and can run on your laptop

- Claude excels at larger changes, conversions

- LLMs can get stuck in some frustrating pits

- You still have to bring your experience to the table and be critical

What comes next

For Traffi, the rough plan for now is:

- label domain specific footage to develop an evaluation set

- train base model on domain specific footage

- add reports and charts, e.g. daily totals, frequency histograms

- expand community involvement
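Since crossings are already stored in a timestamped database, the planned reports could start out as plain queries. The sketch below uses SQLite purely as an assumption, since the database Traffi uses is not named here, and the `crossings` table, its columns, and the helper names are hypothetical.

```python
import sqlite3


def open_db(path=":memory:"):
    """Open the database and create the (hypothetical) crossings table."""
    db = sqlite3.connect(path)
    # ts is local time as 'YYYY-MM-DD HH:MM:SS'; illegal is 0 or 1.
    db.execute(
        """CREATE TABLE IF NOT EXISTS crossings (
               ts TEXT NOT NULL,
               origin TEXT NOT NULL,
               destination TEXT NOT NULL,
               illegal INTEGER NOT NULL
           )"""
    )
    return db


def record(db, ts, origin, destination, illegal):
    """Store one detected crossing."""
    db.execute(
        "INSERT INTO crossings VALUES (?, ?, ?, ?)",
        (ts, origin, destination, int(illegal)),
    )


def daily_totals(db):
    """Rows of (day, total crossings, illegal crossings), oldest day first."""
    return db.execute(
        "SELECT date(ts) AS day, COUNT(*), SUM(illegal) "
        "FROM crossings GROUP BY day ORDER BY day"
    ).fetchall()


def hourly_histogram(db):
    """Illegal crossings per hour of day as (hour, count); empty hours are omitted."""
    return db.execute(
        "SELECT CAST(strftime('%H', ts) AS INTEGER) AS hour, COUNT(*) "
        "FROM crossings WHERE illegal = 1 GROUP BY hour ORDER BY hour"
    ).fetchall()


db = open_db()
record(db, "2025-01-15 08:05:00", "south", "east", True)
record(db, "2025-01-15 08:40:00", "south", "north", False)
record(db, "2025-01-15 17:20:00", "south", "east", True)
record(db, "2025-01-16 09:00:00", "north", "south", False)
print(daily_totals(db))       # [('2025-01-15', 3, 2), ('2025-01-16', 1, 0)]
print(hourly_histogram(db))   # [(8, 1), (17, 1)]
```

The sample rows are made up for the example and are not measurements from the intersection.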

Any feedback is welcome, over at https://traffi.skcfi.dev

AI tooling continues to explode in growth, and I’m constantly trying new models and tools to build and work even faster. Note that I opted to keep things simple and just use chat when bootstrapping here, which works fine if your app is just a few files.

Stay tuned for more posts about my experiences building with, using and for AI.

Links

Hardware

LLMs